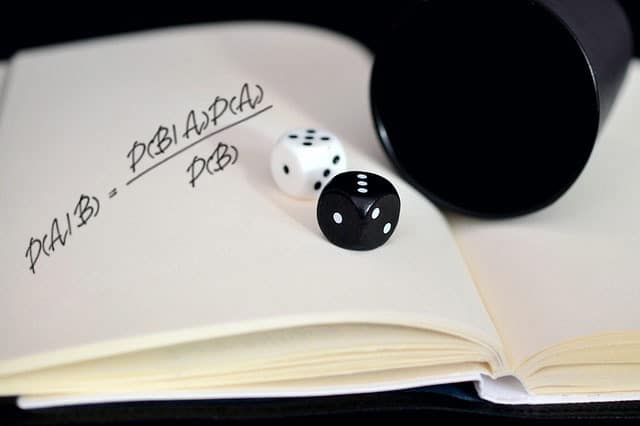

Why do people flip coins to resolve disputes? It usually happens when neither of two sides wants to compromise with the other about a particular decision. They choose the coin to be the unbiased agent that decides whose way things are going to go. The coin is an unbiased agent because the two possible outcomes of the flip (heads and tails) are equally likely to occur.

Intuitive Explanation of P-Values

If you ever took an introductory course in statistics or attempted to read a publication in a scientific journal, you know what p-values are. Or at least you’ve seen them. Most of the time they appear in the “results” section of a paper, attached to claims that need verification. For example:

- “Ratings of the target person’s ‘dating desirability’ showed the predicted effect of prior stimuli, […], p < .002.”

The stuff in the square brackets is usually other relevant statistics, such as the mean difference between experimental groups. If the p-value is below a certain threshold, the result is labeled “statistically significant” and otherwise it’s labeled “not significant”. But what does that mean? What is the result significant for? And for whom? What does all of that say about the credibility of the claim preceded by the p-value?

There are common misinterpretations of p-values and the related concept of “statistical significance”. In this post, I’m going to properly define both concepts and show the intuition behind their correct interpretation.

If you don’t have much experience with probabilities, I suggest you take a look at the introductory sections of my post about Bayes’ theorem, where I also introduce some basic probability theory concepts and notation.

The Inverse Problem and Bayes’ Theorem

What will happen if you grab a solid rock and throw it at your neighbor’s window? The most common result is that the window will break. If your neighbor later asks if you know anything about the incident, you can confidently inform him that his window was broken because you threw a rock at it earlier. Cause and effect — sounds pretty straightforward.

But what if it wasn’t you who broke the window and, in fact, you have no idea what broke it? Did someone else throw a rock at it? Was there a large temperature difference between the center and the periphery of the glass which caused a spontaneous breakage? Or was it a spontaneous breakage caused by a manufacturing defect?

You see, unlike the previous question, this one is actually not straightforward to answer. It involves solving the so-called inverse problem: inferring the causes of a particular effect.

Bayes’ Theorem: An Informal Derivation

If you’re reading this post, I’ll assume you are familiar with Bayes’ theorem. If not, take a look at my introductory post on the topic.

Here I’m going to explore the intuitive origins of the theorem. I’m sure that after reading this post you’ll have a good feeling for where the theorem comes from. I’m also sure you will find the simplicity of its mathematical derivation impressive. For that, some familiarity with sample spaces (which I discussed in this post) would come in handy.

So, what does Bayes’ theorem state again?

What Is a Sample Space?

For example, the sample space of the process of flipping a coin is a set with 2 elements. Each represents one of the two possible outcomes: “heads” and “tails”. The sample space of rolling a die is a set with 6 elements and each represents one of the six sides of the die. And so on. Other terms you may come across are event space and possibility space.
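To make the two examples concrete, here is a minimal Python sketch that writes each sample space as a set. The helper name `uniform_probability` is hypothetical, and it assumes every outcome is equally likely (a fair coin and a fair die), which is an assumption of this sketch and not a property of sample spaces in general.

```python
from fractions import Fraction

# Sample spaces written as plain sets of outcomes.
coin = {"heads", "tails"}
die = set(range(1, 7))  # the six sides: 1, 2, 3, 4, 5, 6


def uniform_probability(event, sample_space):
    """Probability of an event, ASSUMING all outcomes are equally likely.

    An event is any subset of the sample space. Outcomes that are not
    in the sample space are ignored.
    """
    favorable = event & sample_space
    return Fraction(len(favorable), len(sample_space))


print(len(coin), len(die))                      # sizes of the two sample spaces
print(uniform_probability({"heads"}, coin))     # chance of heads
print(uniform_probability({2, 4, 6}, die))      # chance of an even roll
```

Treating an event as a subset of the sample space keeps the code close to the set-based definition: counting outcomes is all that is needed once the equal-likelihood assumption is in place.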

Before getting to the details of sample spaces, I first want to properly define the concept of probabilities.

What Is Bayes’ Theorem? A Friendly Introduction

While this post isn’t about listing its real-world applications, I’m going to give the general gist for why it has such potential in the first place.

Alright, let’s get to it.
