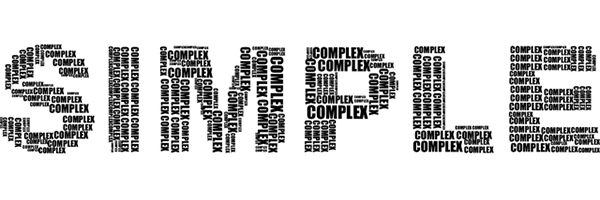

“Simple explanations are better than complex explanations.” Have you heard this statement before? It’s the simplest version of a principle called Occam’s razor. More precisely, the principle says:

> A simple theory is always preferable to a complex theory if the complex theory doesn’t offer a better explanation.

Does that make sense? If it’s not immediately convincing, that’s okay. The validity and applicability of Occam’s razor have been debated for a very long time.

In this post, I’ll give an intuitive introduction to the principle and its justification. I’ll show that, despite the historical debates, there is a sense in which Occam’s razor is always valid. In fact, I’ll try to convince you that this principle is so true that it doesn’t even need to be stated on its own.

In my