This is a bonus post for my main post on the binomial distribution. Here I want to give a formal proof for the binomial distribution mean and variance formulas I previously showed you.

This post is part of my series on discrete probability distributions.

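In the main post, I told you that, for a binomial random variable K with n trials and probability of success p, these formulas are:

\[ \mathbb{E}[K] = np \]

\[ \textrm{Variance}(K) = np(1-p) \]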

I also gave you an intuitive derivation of these formulas, based on a property of sums of independent random variables: the mean (and, respectively, the variance) of such a sum is equal to the sum of the means (variances) of the individual random variables that form it. We could prove this statement too, but I don't want to do that here and I'll leave it for a future post.

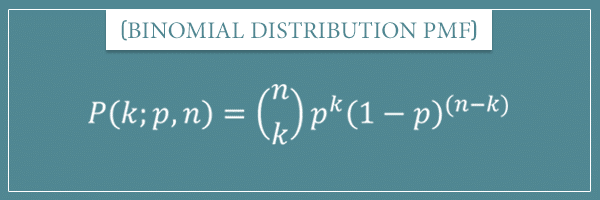

Instead, I want to take the general formulas for the mean and variance of discrete probability distributions and derive the specific binomial distribution mean and variance formulas from the binomial probability mass function (PMF):

\[ P(K = k) = \binom{n}{k} p^k(1-p)^{n-k} \]
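If you'd like to experiment with the PMF numerically, here is a minimal Python sketch of P(K = k) = C(n, k) · p^k · (1 - p)^(n - k). It's my own illustration, not part of the derivation itself: the function name binomial_pmf is hypothetical, and I'm assuming exact rational arithmetic via Python's fractions.Fraction so that results are exact rather than floating-point approximations.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(k: int, n: int, p: Fraction) -> Fraction:
    """P(K = k) for a binomial distribution with n trials and success probability p."""
    if not 0 <= k <= n:
        return Fraction(0)  # outcomes outside 0..n are impossible
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Example: n = 4 trials, p = 1/2
n, p = 4, Fraction(1, 2)
probabilities = [binomial_pmf(k, n, p) for k in range(n + 1)]
print(probabilities)       # [Fraction(1, 16), Fraction(1, 4), Fraction(3, 8), Fraction(1, 4), Fraction(1, 16)]
print(sum(probabilities))  # 1
```

Using Fraction instead of float is a deliberate choice: it lets us compare results with == exactly, without worrying about rounding errors.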

To do that, I'm first going to derive a few auxiliary arithmetic properties and equations. We're going to use them as building blocks of the main proofs, but they're useful well beyond this post and you can apply them in many other derivations.

I think this post will be a great exercise for those of you who don't have much experience in formal derivations of mathematical formulas. I'm going to be as explicit as I can and try not to skip even the smallest steps. So, don't be scared by the quantity of equations in this post. I promise, you'll be able to follow everything!

And if you happen to get stuck somewhere, I’m going to answer all of your questions in the comments. Don’t hesitate to ask me anything.


Auxiliary properties and equations

To make it easy to refer to them later, I’m going to label the important properties and equations with numbers, starting from 1. These identities are all we need to prove the binomial distribution mean and variance formulas. The derivations I’m going to show you also generally rely on arithmetic properties and, if you’re not too experienced with those, you might benefit from going over my post breaking down the main ones.

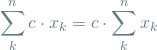

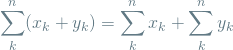

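The first two equations are two important identities involving the sum operator, which I proved in my recent post on the topic:

\[ \sum_{k} c \cdot x_k = c \cdot \sum_{k} x_k \qquad (1) \]

\[ \sum_{k} (x_k + y_k) = \sum_{k} x_k + \sum_{k} y_k \qquad (2) \]

Here c is a constant that doesn't depend on k, and both identities hold for any range of the index k.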
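Equations (1) and (2) are proved in the post I mentioned, but a quick numeric sanity check can make them feel more concrete. The sketch below (Python, with made-up example values) evaluates both sides of each identity; agreement on one example is of course not a proof.

```python
from fractions import Fraction

# Made-up example values, chosen only for illustration
x = [Fraction(1, 2), Fraction(3), Fraction(-2, 5), Fraction(7, 4)]
y = [Fraction(2), Fraction(1, 3), Fraction(5), Fraction(-1, 6)]
c = Fraction(3, 7)  # a constant that doesn't depend on the index k

# Equation (1): a constant factor can be moved outside the sum
lhs_1 = sum(c * x_k for x_k in x)
rhs_1 = c * sum(x)

# Equation (2): the sum of (x_k + y_k) splits into two separate sums
lhs_2 = sum(x_k + y_k for x_k, y_k in zip(x, y))
rhs_2 = sum(x) + sum(y)

print(lhs_1 == rhs_1, lhs_2 == rhs_2)  # True True
```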

Next, in another recent post on different variance formulas, I showed you the following alternative variance formula for a random variable X with mean M:

\[ \textrm{Variance}(X) = \mathbb{E}[X^2] - M^2 \qquad (3) \]

It's a pretty nice formula used in many derivations, not just the ones I'm about to show you (for more intuition, check out the post I mentioned above). According to this formula, the variance can also be expressed as the expected value of X^2 minus the square of the mean M.
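Here is a small Python sketch that computes the variance of a made-up discrete distribution in two ways: with the general definition (the expected squared distance from the mean) and with equation (3). The distribution itself is a hypothetical example, and exact fractions are used so the two results can be compared with ==.

```python
from fractions import Fraction

# A small, made-up discrete distribution: value -> probability (hypothetical example)
distribution = {0: Fraction(1, 5), 1: Fraction(1, 2), 3: Fraction(3, 10)}

mean = sum(x * prob for x, prob in distribution.items())

# General definition: the expected squared distance from the mean
variance_definition = sum((mean - x) ** 2 * prob for x, prob in distribution.items())

# Equation (3): E[X^2] minus the squared mean
expected_square = sum(x**2 * prob for x, prob in distribution.items())
variance_alternative = expected_square - mean**2

print(mean, variance_definition, variance_alternative)  # 7/5 31/25 31/25
```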

A property of the binomial coefficient

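Finally, I want to show you a simple property of the binomial coefficient which we're going to use in proving both formulas. Remember the binomial coefficient formula:

\[ \binom{n}{k} = \frac{n!}{k!\,(n-k)!} \]

The first useful result I want to derive is for the expression \( \binom{n-1}{k-1} \). Plugging n – 1 and k – 1 into the formula above, and noticing that (n – 1) – (k – 1) = n – k, we get:

\[ \binom{n-1}{k-1} = \frac{(n-1)!}{(k-1)!\,\big((n-1)-(k-1)\big)!} \]

Therefore:

\[ \binom{n-1}{k-1} = \frac{(n-1)!}{(k-1)!\,(n-k)!} \]

Now let's do something else. One of the simplest properties of the factorial function is:

\[ n! = n \cdot (n-1)! \]

I want to use this to derive the main property of binomial coefficients we're interested in here. First, let's use it to rewrite the right-hand side in the following way (dividing both sides of the factorial property by n gives (n – 1)! = n!/n):

\[ \binom{n-1}{k-1} = \frac{n!}{n \cdot (k-1)!\,(n-k)!} \]

And multiplying both sides by n (using the commutative property of multiplication, a · b = b · a, to write the n on the left), we get:

\[ n \cdot \binom{n-1}{k-1} = \frac{n!}{(k-1)!\,(n-k)!} \]

Now let's say we start with another expression:

\[ k \cdot \binom{n}{k} = k \cdot \frac{n!}{k!\,(n-k)!} = k \cdot \frac{n!}{k \cdot (k-1)!\,(n-k)!} = \frac{n!}{(k-1)!\,(n-k)!} \]

In the second step, I applied the same factorial property to k! (that is, k! = k · (k – 1)!), and in the last step, I simply canceled out the two k's. Finally, using the previous result, we can replace the right-hand side with \( n \cdot \binom{n-1}{k-1} \) and get the property we need (valid for 1 ≤ k ≤ n):

\[ k \cdot \binom{n}{k} = n \cdot \binom{n-1}{k-1} \qquad (4) \]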
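Property (4) is easy to spot-check by brute force. The following Python sketch (the helper name check_property_4 is hypothetical) uses math.comb to compare both sides for every 1 ≤ k ≤ n up to a chosen bound. A finite check like this doesn't replace the algebraic proof, but it's a handy way to catch a typo in a formula.

```python
from math import comb


def check_property_4(max_n: int) -> bool:
    """Return True if k * C(n, k) == n * C(n - 1, k - 1) for every 1 <= k <= n <= max_n."""
    return all(
        k * comb(n, k) == n * comb(n - 1, k - 1)
        for n in range(1, max_n + 1)
        for k in range(1, n + 1)
    )


# One concrete instance: n = 5, k = 2 gives 2 * 10 == 5 * 4 == 20
print(2 * comb(5, 2), 5 * comb(4, 1))  # 20 20
print(check_property_4(30))            # True
```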

And with all that out of the way, let’s get to the main proofs of today’s post!

Mean of binomial distributions derivation

Well, here we reach the main point of this post! Let’s use these equations and properties to derive the formulas we’re interested in.

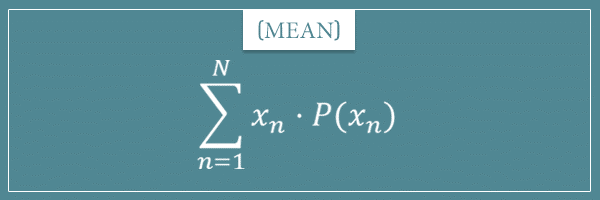

First is the mean. Here's the general formula again:

\[ \mathbb{E}[X] = \sum_{n=1}^{N} x_n \cdot P(x_n) \]

Let's plug the binomial distribution PMF into this formula. To be consistent with the binomial distribution notation, I'm going to use k for the argument (instead of x), and the index of the sum will naturally range from 0 to n. So, with this in mind, we have:

\[ \mathbb{E}[K] = \sum_{k=0}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} \]

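But notice that when k = 0, the first term of the sum is zero (it's multiplied by k = 0), so it doesn't contribute to the overall sum. Therefore, we can also write the formula with the index starting from k = 1:

\[ \mathbb{E}[K] = \sum_{k=1}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} \]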

Now let's see how we can manipulate the right-hand side to get the desired \( \mathbb{E}[K] = np \).

Proof steps

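The first step in the derivation is to apply the binomial property from equation (4) to the right-hand side:

\[ \mathbb{E}[K] = \sum_{k=1}^{n} n \cdot \binom{n-1}{k-1} p^k(1-p)^{n-k} \]

\[ = n \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^k(1-p)^{n-k} \]

In the second line, I simply used equation (1) to get n out of the sum operator (because it doesn't depend on k).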

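Next, we're going to use the product rule of exponents:

\[ x^a \cdot x^b = x^{a+b} \]

A special case of this rule (with a = 1, and b replaced by b – 1) is:

\[ x^b = x \cdot x^{b-1} \]

And we're going to use it to rewrite \( p^k \) as \( p \cdot p^{k-1} \):

\[ \mathbb{E}[K] = n \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p \cdot p^{k-1}(1-p)^{n-k} \]

\[ = np \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1}(1-p)^{n-k} \]

Again, in the last line I simply took the constant term p out of the sum operator. Finally, let's apply the identity n – k = (n – 1) – (k – 1) to the exponent of (1 – p):

\[ \mathbb{E}[K] = np \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1}(1-p)^{(n - 1) - (k - 1)} \]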

The final proof

Believe it or not, we're almost done here. Notice that, after the last manipulation, there are a lot of terms like n – 1 and k – 1 inside the sum operator. To make the expression a little more readable, let's rewrite it by applying the following variable substitutions:

\[ m = n - 1, \qquad j = k - 1 \]

This results in:

\[ \mathbb{E}[K] = np \cdot \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} \]

Here j starts from 0 because j = k – 1 and the k index started from 1 before the variable substitution. Similarly, when k reaches its upper bound n, j reaches n – 1 = m. So the new index runs from 0 to m, which preserves the number of terms in the sum (n terms in both cases).
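To make the index bookkeeping concrete, here is a small Python sketch for n = 3 (an arbitrary choice). For each term of the sum, it records the (upper, lower) pair of the binomial coefficient, first with the original index k running from 1 to n and then with j running from 0 to m. Python's range excludes its stop value, which is why the loops use n + 1 and m + 1.

```python
# Example with n = 3 (an arbitrary choice)
n = 3
m = n - 1

# Original sum: k runs from 1 to n, and each term contains C(n - 1, k - 1)
original_terms = [(n - 1, k - 1) for k in range(1, n + 1)]

# After the substitution m = n - 1, j = k - 1: j runs from 0 to m, and each term contains C(m, j)
substituted_terms = [(m, j) for j in range(0, m + 1)]

print(original_terms)     # [(2, 0), (2, 1), (2, 2)]
print(substituted_terms)  # [(2, 0), (2, 1), (2, 2)]

# If the upper bound were m + 1 instead, the sum would get an extra term (j = 3)
too_many_terms = [(m, j) for j in range(0, m + 2)]
print(len(original_terms), len(too_many_terms))  # 3 4
```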

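Now, do you recognize the term inside the sum operator?

\[ \binom{m}{j} p^j(1-p)^{m-j} \]

It looks exactly like the binomial PMF, doesn't it? Only k has been replaced with j and n with m. And since the sum is from 0 to m, this is simply the sum of the probabilities of all possible outcomes of a binomial distribution with m trials, right? Then, by definition:

\[ \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} = 1 \]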

And plugging this last result into what we have so far, we get:

\[ \mathbb{E}[K] = np \cdot 1 \]

Therefore, we can now confidently state:

\[ \mathbb{E}[K] = np \]
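As a numerical double-check of the result E[K] = np, the following Python sketch evaluates the definition of the mean term by term with exact fractions and compares it with n · p. The function name binomial_mean and the (n, p) pairs are my own, chosen only for illustration.

```python
from fractions import Fraction
from math import comb


def binomial_mean(n: int, p: Fraction) -> Fraction:
    """E[K] straight from the definition: the sum of k * P(K = k) for k = 0, ..., n."""
    return sum(k * comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1))


# Example parameter pairs (arbitrary): the last two printed values should agree
for n, p in [(1, Fraction(1, 2)), (5, Fraction(1, 3)), (10, Fraction(7, 10))]:
    print(n, p, binomial_mean(n, p), n * p)
```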

Q.E.D.

Variance of binomial distributions derivation

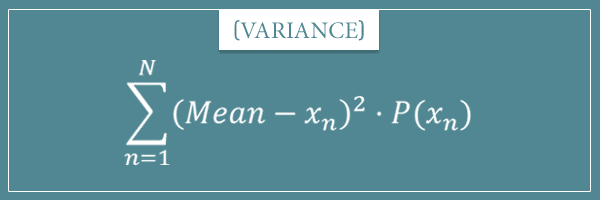

Now it's time to prove the variance formula. Remember the general variance formula for discrete probability distributions:

\[ \textrm{Variance}(X) = \sum_{n=1}^{N} (M - x_n)^2 \cdot P(x_n) \]

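Like before, for the argument of the PMF I'm going to use k instead of x. Furthermore, I'm going to use the alternative variance formula from equation (3):

\[ \textrm{Variance}(K) = \mathbb{E}[K^2] - (\mathbb{E}[K])^2 \]

Using the result from the previous section:

\[ \mathbb{E}[K] = np \]

And we can plug in np for the mean:

\[ \textrm{Variance}(K) = \mathbb{E}[K^2] - (np)^2 \]

We've already solved half of the problem! Now let's focus on the \( \mathbb{E}[K^2] \) term:

\[ \mathbb{E}[K^2] = \sum_{k=0}^{n} k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \]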

Like before, when k = 0 the first term in the sum becomes zero again. So we can similarly write the same sum with the index starting from 1:

\[ \mathbb{E}[K^2] = \sum_{k=1}^{n} k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \]

With this setup, let's start with the actual proof. The focus is going to be on manipulating the last equation. When we're done with that, we're going to plug the final result into the main formula.

You’ll see that the mathematical tricks we use are going to be very similar to the ones we used in the previous proof.

Proof steps

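First, using equation (4), let's rewrite the part inside the sum as:

\[ k^2 \cdot \binom{n}{k} = k \cdot \left( k \cdot \binom{n}{k} \right) = k \cdot n \cdot \binom{n-1}{k-1} \]

Plugging this in and taking the constant term n out of the sum operator, we get:

\[ \mathbb{E}[K^2] = n \cdot \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^k(1-p)^{n-k} \]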

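Next, let's use the same tricks as in the previous proof: rewrite \( p^k \) as \( p \cdot p^{k-1} \), take the constant p out of the sum, and write the exponent n – k as (n – 1) – (k – 1):

\[ \mathbb{E}[K^2] = np \cdot \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{n-k} \]

\[ = np \cdot \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{(n-1) - (k-1)} \]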

Simplifying the sum

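Now let's ignore the constant product np for a moment and just focus on the sum. Let's apply the same variable substitution rules as before:

\[ m = n - 1, \qquad j = k - 1 \]

Since k = j + 1, the factor k in front of the binomial coefficient becomes (j + 1). This allows us to rewrite the sum as:

\[ \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{(n-1) - (k-1)} = \sum_{j=0}^{m} (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} \]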

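Next, let's expand (j + 1) and use equation (2) to split this sum into two sums:

\[ \sum_{j=0}^{m} (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} = \sum_{j=0}^{m} j \cdot \binom{m}{j} p^j(1-p)^{m-j} + \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} \]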

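Well, these individual sums are nothing but the expected value and the sum of probabilities of a binomial distribution with parameters m and p (for the first one, we can use the mean formula we just proved):

\[ \sum_{j=0}^{m} j \cdot \binom{m}{j} p^j(1-p)^{m-j} = mp = (n-1)p \]

\[ \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} = 1 \]

Therefore, the final sum reduces to:

\[ \sum_{j=0}^{m} (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} = (n-1)p + 1 \]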
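This reduction can be checked numerically too. The Python sketch below (the helper name shifted_sum is hypothetical, and n = 6, p = 2/5 are arbitrary example values) evaluates the sum with the (j + 1) factor directly and compares it with (n - 1)p + 1.

```python
from fractions import Fraction
from math import comb


def shifted_sum(m: int, p: Fraction) -> Fraction:
    """The sum over j = 0, ..., m of (j + 1) * C(m, j) * p^j * (1 - p)^(m - j)."""
    return sum((j + 1) * comb(m, j) * p**j * (1 - p) ** (m - j) for j in range(m + 1))


# Example parameters (arbitrary), with m = n - 1 as in the substitution
n, p = 6, Fraction(2, 5)
m = n - 1
print(shifted_sum(m, p), (n - 1) * p + 1)  # 3 3
```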

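And when we plug this into the full expression for \( \mathbb{E}[K^2] \), we get:

\[ \mathbb{E}[K^2] = np \cdot \big( (n-1)p + 1 \big) \]

Which we can rewrite as:

\[ \mathbb{E}[K^2] = np(n-1)p + np \]

So, finally we get:

\[ \mathbb{E}[K^2] = n^2p^2 - np^2 + np \]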
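And here's a similar check for the second moment: a Python sketch that computes E[K^2] directly from the PMF and compares it with n^2 p^2 - n p^2 + n p. The function name binomial_second_moment and the example parameters (n = 8, p = 1/4) are my own assumptions.

```python
from fractions import Fraction
from math import comb


def binomial_second_moment(n: int, p: Fraction) -> Fraction:
    """E[K^2] straight from the definition: the sum of k^2 * P(K = k) for k = 0, ..., n."""
    return sum(k**2 * comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1))


# Example parameters (arbitrary)
n, p = 8, Fraction(1, 4)
closed_form = n**2 * p**2 - n * p**2 + n * p
print(binomial_second_moment(n, p), closed_form)  # 11/2 11/2
```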

The final proof

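We're on the home stretch. Let's remember what we started with:

\[ \textrm{Variance}(K) = \mathbb{E}[K^2] - (np)^2 \]

In the previous section we established that:

\[ \mathbb{E}[K^2] = n^2p^2 - np^2 + np \]

Therefore:

\[ \textrm{Variance}(K) = n^2p^2 - np^2 + np - n^2p^2 = np - np^2 = np(1-p) \]

Which is what we wanted to prove!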
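Finally, here's a brute-force check of the variance formula. This Python sketch computes the variance from the general definition, the sum of (M - k)^2 · P(K = k), with exact fractions and asserts that it equals np(1 - p) for a range of n values and a few p values, including the edge cases p = 0 and p = 1. The helper name binomial_variance is hypothetical, and, like any numeric check, this verifies finitely many cases rather than proving the formula.

```python
from fractions import Fraction
from math import comb


def binomial_variance(n: int, p: Fraction) -> Fraction:
    """Variance from the general definition: the sum of (M - k)^2 * P(K = k), with M the mean."""
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    mean = sum(k * prob for k, prob in enumerate(pmf))
    return sum((mean - k) ** 2 * prob for k, prob in enumerate(pmf))


# Compare with np(1 - p) for several n and p, including the edge cases p = 0 and p = 1
for n in range(12):
    for p in (Fraction(0), Fraction(1, 3), Fraction(9, 10), Fraction(1)):
        assert binomial_variance(n, p) == n * p * (1 - p)

print("Variance matches np(1 - p) in every tested case")
```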

Q.E.D.

Summary

In this post, I showed you a formal derivation of the binomial distribution mean and variance formulas. This is the first formal proof I’ve ever done on my website and I’m curious if you found it useful. Let me know if it was easy to follow.

Before the actual proofs, I showed a few auxiliary properties and equations.

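The two properties of the sum operator (equations (1) and (2)):

\[ \sum_{k} c \cdot x_k = c \cdot \sum_{k} x_k \qquad (1) \]

\[ \sum_{k} (x_k + y_k) = \sum_{k} x_k + \sum_{k} y_k \qquad (2) \]

An alternative formula for the variance of a random variable (equation (3)):

\[ \textrm{Variance}(X) = \mathbb{E}[X^2] - M^2 \qquad (3) \]

The binomial coefficient property (equation (4)):

\[ k \cdot \binom{n}{k} = n \cdot \binom{n-1}{k-1} \qquad (4) \]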

Using these identities, as well as a few simple mathematical tricks, we derived the binomial distribution mean and variance formulas. In the last two sections below, I’m going to give a summary of these derivations.

I know there was a lot of mathematical expression manipulation, some of which was a little bit on the hairy side. However, I’m firmly convinced that even less experienced readers can understand these proofs. If you struggled to follow any part of this post (or even the post as a whole), don’t hesitate to ask me any question!

By the way, if you’re new to mathematical proofs but find it an interesting subject, check out this Wikipedia article on mathematical proofs which gives a good overview of the subject.

Mean of binomial distributions proof

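We start by plugging the binomial PMF into the general formula for the mean of a discrete probability distribution:

\[ \mathbb{E}[K] = \sum_{k=0}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} = \sum_{k=1}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} \]

Then we use equations (4) and (1) and the product rule of exponents:

\[ = np \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1}(1-p)^{(n - 1) - (k - 1)} \]

Finally, we use the variable substitutions m = n – 1 and j = k – 1 and simplify:

\[ = np \cdot \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} = np \cdot 1 = np \]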

Q.E.D.

Variance of binomial distributions proof

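Again, we start by plugging the binomial PMF into the general formula for the variance of a discrete probability distribution, in its alternative form from equation (3):

\[ \textrm{Variance}(K) = \sum_{k=0}^{n} \left( k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \right) - (np)^2 = \sum_{k=1}^{n} \left( k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \right) - (np)^2 \]

Then we use equations (4) and (1) and the product rule of exponents:

\[ = n \cdot \sum_{k=1}^{n} \left( k \cdot \binom{n-1}{k-1} p^k(1-p)^{n-k} \right) - (np)^2 \]

\[ = np \cdot \sum_{k=1}^{n} \left( k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{(n - 1) - (k - 1)} \right) - (np)^2 \]

Next, we use the variable substitutions m = n – 1 and j = k – 1:

\[ = np \cdot \sum_{j=0}^{m} \left( (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} \right) - (np)^2 \]

Finally, we simplify:

\[ = np \cdot \sum_{j=0}^{m} j \cdot \binom{m}{j} p^j(1-p)^{m-j} + np \cdot \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} - (np)^2 \]

\[ = np(n-1)p + np - (np)^2 = np(1-p) \]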

Q.E.D.

![Rendered by QuickLaTeX.com \[\textrm{Variance(X)} = \sum_{n=1}^{N} (M - x_n)^2 \cdot P(x_n)\]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-ea19686f01c188a9418e05028bb85ec1_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K] = \sum_{k=0}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-654a83f4b1642be770e0dc6ba8f76fa6_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K] = \sum_{k=1}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-c1977dfcb9bc630ebc5f8b278d8bb610_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K] = \sum_{k=1}^{n} n \cdot \binom{n-1}{k-1} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-3c4a0cea39ac8e910c833aa382638248_l3.png)

![Rendered by QuickLaTeX.com \[ = n \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-1511e10ed38433c40af6fd263e97f843_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K] = n \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} pp^{k-1}(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-c47f74d8267065dab0b7f2576539baf1_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1}(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-7b4ac340096b142406ccca74a6dd5e6b_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K] = np \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1}(1-p)^{(n - 1) - (k - 1)} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-b79a869f568a88ae8ce60059169f0f45_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K] = np \cdot \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-dcf67bf4015cf7700ebe3d13942a7dc0_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} = 1 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-68cc13ae12ef1fbb77e751c714917925_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K^2] = \sum_{k=0}^{n} k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-c7ed807f68edf3219b8cba9e95517a34_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K^2] = \sum_{k=1}^{n} k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-938949827d4bcd5103e5ea663d21ef7b_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K^2] = n \cdot \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-efaa46c58b0a31999ac69e2e3d8f3e95_l3.png)

![Rendered by QuickLaTeX.com \[ \mathop{\mathbb{E}[K^2] = np \cdot \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-9edd47bce809a6ccbefe93f4f02f0d65_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{(n-1) - (k-1)} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-0958e07f172bc4e60e59fbfee0c1460e_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{k=1}^{n} k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{(n-1) - (k-1)} = \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-76c9bec117c68cf85595a3f456e888ec_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{j=0}^{m} (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-8eef1be4e217ff4039cf871dc8997b31_l3.png)

![Rendered by QuickLaTeX.com \[ = \sum_{j=0}^{m} j \cdot \binom{m}{j} p^j(1-p)^{m-j} + \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-02796d885f851fe2e14e62f8056e9820_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{j=0}^{m} j \cdot \binom{m}{j} p^j(1-p)^{m-j} = mp = (n-1)p \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-2ec46be6c1494ebc1ab711487b466646_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{j=0}^{m} (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} = (n-1)p + 1 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-cd46a4447182d5bdb8d9f67e6fe3bc40_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{k}^{n} c \cdot x_k = c \cdot \sum_{k}^{n} x_k \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-6886ed0def4110cc2b46596c2cdf4232_l3.png)

![Rendered by QuickLaTeX.com \[ \sum_{k}^{n} (x_k + y_k) = \sum_{k}^{n} x_k + \sum_{k}^{n} y_k \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-ad698e9fd7f166ec29560107a7188491_l3.png)

![Rendered by QuickLaTeX.com \[ = \sum_{k=0}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} = \sum_{k=1}^{n} k \cdot \binom{n}{k} p^k(1-p)^{n-k} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-86a5b4d8d0b40078b759f72083012dd6_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1}(1-p)^{(n - 1) - (k - 1)} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-1c3ad84f767564864b1b5f0850a78053_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-a33c57bf44f25c9b44ba9dc1f4aca608_l3.png)

![Rendered by QuickLaTeX.com \[ = \sum_{k=0}^{n} \left( k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \right) - (np)^2 = \sum_{k=1}^{n} \left( k^2 \cdot \binom{n}{k} p^k(1-p)^{n-k} \right) - (np)^2 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-b1df8a5b599ec4ad8edf65b1af33bbbd_l3.png)

![Rendered by QuickLaTeX.com \[ = n \cdot \sum_{k=1}^{n} \left( k \cdot \binom{n-1}{k-1} p^k(1-p)^{n-k} \right) - (np)^2 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-46de73ecd08aa804fe68573edca2ad73_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{k=1}^{n} \left( k \cdot \binom{n-1}{k-1} p^{k-1}(1-p)^{(n - 1) - (k - 1)} \right) - (np)^2 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-f9af2b509dadaa3a15008bb9f4c5d07c_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{j=0}^{m} \left( (j + 1) \cdot \binom{m}{j} p^j(1-p)^{m-j} \right) - (np)^2 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-9ceda91185f6cdbd844a57aba7db3881_l3.png)

![Rendered by QuickLaTeX.com \[ = np \cdot \sum_{j=0}^{m} j \cdot \binom{m}{j} p^j(1-p)^{m-j} + np \cdot \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} - (np)^2 \]](https://www.probabilisticworld.com/wp-content/ql-cache/quicklatex.com-13a50e3c620798820ca823a32a2a2e6d_l3.png)

Great job! I definitely found the proofs useful and easy to follow. The refresher on the various mathematical properties was a nice reminder (it’s been a while…). Thanks!

Thank you.

Excellent proof…Simple to understand

I find it difficult to understand some of the manipulations

Hey Andrew, until which part do you follow everything before you reach the first difficulty? Which step(s) in particular confuse you? Let me know and I’ll help you get through it.

Thank you so much, it was thoroughly explained. What I don't understand is why m replaced n and j replaced k.

Hi Deinma, I'm glad you found the explanation helpful! The change of variables is really just for readability purposes. If a computer were doing the same proof, this step might be unnecessary.

Take a look at the final proof section for the mean, for example. In particular, notice the last expression for the mean formula just before that section. There are all these (n-1) and (k-1) terms inside the sum, right?

Now, do you recognize that this is a sum over the binomial distribution PMF? I personally might not immediately recognize it if I see it in this form. On the other hand, if we apply the variable substitutions m = n – 1 and j = k – 1, the same sum becomes:

\[ \sum_{j=0}^{m} \binom{m}{j} p^j(1-p)^{m-j} \]

Which is an identical expression, but much easier to read. And now that we recognize it as the sum over all possible values of a PMF, we know the whole sum is equal to 1 and we can completely ignore it. In principle, I could have used the same letters (k and n) but that would introduce a different type of confusion when comparing the current expression to previous steps.

Variable substitution (also known as ‘change of variables’) is a very common technique in algebra and math in general. It’s typically used for simplifying expressions or putting expressions into a familiar form where we can then substitute the whole thing with a known formula (as was the case in the proofs here).

Let me know if I managed to clarify things for you.

Hi,

Can you explain why the upper index of summation should not be m + 1, since m = n – 1? Note that the original summation had n as the upper index, hence n = m + 1 if we go by the substitution that you just enforced.

Otherwise, everything else is very insightful!

I like your proofs!

Symon

Hi Symon,

This is among the confusing parts of the post, it’s not just you. Could you take a look at my reply to Yuki’s question further down the page? I think you are asking the same thing. Of course, let me know if you still find something unclear, I’ll be happy to clarify.

Thanks

thank you

Small error: the second last step should be

np(n-1)p+np-(np)^2

Rather than

npn(p-1)+np-(np)^2

Oh yeah, another annoying typo 🙂 Thanks for pointing it out, Yan. Fixed!

Hi Andrew, thank you for this.

Right before reaching the end, when you write “Finally, we simplify:”, what do you do to the binomial coefficient? The same exact process you did before changing the variables? I mean, like now z = y- 1 and r = m – 1? And because of that, we know it sums up to 1?

Thanks again.

Hey Emilio,

My name isn’t Andrew, I’m assuming you confused it with one of the earlier commenters 🙂 No worries.

Yes, the change of variables is simply meant to put the sums into the canonical forms for the respective formulas (the PMF, the mean, etc.). It’s a step that people sometimes skip because they just spot the pattern and do the simplification in their heads.

Let me know if you need further clarification.

Very nice derivation and easy to follow. Thanks for the good work.

When you derive E[k^2], why don’t all k become k^2?

Hi Umi, I’m not sure I understand your question. Could you show the exact step at which you stop following?

Hi Umi,

I’ll follow up on this one as I am somewhat unclear on this also. If we consider the expected value of a BPD to be:

E[x] = \sum{x*P(X = x)}

Then why is k^2 not substituted into the probability function?

For example, the proof shows:

E[k^2] = \sum{k^2*P(X = k)}

whereas I was expecting:

x = k^2 \therefore E[k^2] = (k^2) * P(X = (k^2))

Does that make any sense where my confusion is coming from?

I did it another way. My confusion was, for example, in the mean: after the substitution it's OK that y starts from 0, but I thought n must have become m – 1 through the formula (x – 1) = y. The issue is resolved by your comment that the number of terms has to remain the same.

In the proof of distribution mean, you let n-1 = m. Then, the end of the summation should be m+1 instead of m. So, I don’t get it.

Hi, Yuki. You’re trying to understand why the upper bound of the sum is m, instead of (m+1), correct?

Notice that after we made the variable substitution n – 1 = m, the lower bound of the sum also changes (from 1 to 0). Basically, the idea is to have an identical sum. Why is the sum running from j=0 to m the same as a sum running from k=1 to n? To see it more clearly, take a specific value for n. For example, n=3.

In the original sum, since k starts at 1 and ends at 3, the k’s in the expression at each iteration will be 1, 2, and 3. Which means that the (k-1) terms in the original sum will be 0, 1, and 2, respectively. Are you with me so far?

Then, when we apply the variable substitution, the sum runs from 0 to m. Because m = n – 1, we know the j’s will run from 0 to 2, right? And inside the sum, everywhere we had (k-1), we now have j instead. So, just like they were in the original sum, the respective values inside the sum term will be 0, 1, and 2. Furthermore, everywhere we had (n-1) in the original expression, now we have m, which is equal to n – 1. Therefore, we know the two expressions are identical, one is just a little tidier.

On the other hand, if we let the upper bound be m+1 instead, then we would have an extra term for j=3, which we didn’t have in the original sum.

Bottom line is, if we decrease the lower bound by 1, we also need to decrease the upper bound by 1, otherwise we will have an extra term (and, therefore, the two sum expressions will be different).

Does that make sense?

I have the same question.

Thank you!

I understood it very well.

thank you

Hey. This was perfect. Thanks.

Clear and thoroughly explained. The derivation in my book was very ambiguous and I could not clearly understand it. But your derivation really cleared my concepts.

Thanks.

Thank you so much for this! It really helped me a lot

I love how you combine different formulas to prove something!

Wonderful proof!

Initially, I too stumbled on m = n – 1!

Thanks!

PKP

Very helpful, thank you.

Thanks for the detailed proof. I don’t get why would the sum of j from j = 0 to j = m equal to j tho.